Chapter 5. SIFT and feature matching

In this tutorial we’ll look at how to compare images to each other. Specifically, we’ll use a popular local feature descriptor called SIFT to extract some interesting points from images and describe them in a standard way. Once we have these local features and their descriptions, we can match local features to each other and therefore compare images to each other, or find a visual query image within a target image, as we will do in this tutorial.

First, let’s load a couple of images. Here we have a magazine and a scene containing the magazine:

MBFImage query = ImageUtilities.readMBF(new URL("http://dl.dropbox.com/u/8705593/query.jpg"));

MBFImage target = ImageUtilities.readMBF(new URL("http://dl.dropbox.com/u/8705593/target.jpg"));

The first step is feature extraction. We’ll use the difference-of-Gaussian feature detector to find interesting points, and describe each one with a SIFT descriptor. The features we find are described in a way which makes them invariant to changes in scale, rotation and position. These are quite powerful features and are used in a variety of tasks. The standard implementation of SIFT in OpenIMAJ can be found in the DoGSIFTEngine class:

DoGSIFTEngine engine = new DoGSIFTEngine();

LocalFeatureList<Keypoint> queryKeypoints = engine.findFeatures(query.flatten());

LocalFeatureList<Keypoint> targetKeypoints = engine.findFeatures(target.flatten());

Once the engine is constructed, we can use it to extract Keypoint objects from our images. The Keypoint class contains a public field called ivec which, in the case of a standard SIFT descriptor, is a 128-dimensional description of a patch of pixels around a detected point. Various distance measures can be used to compare Keypoints to each other.
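As a rough illustration of what such a distance measure does, the sketch below computes the Euclidean distance between two descriptor vectors in plain Java. It does not use OpenIMAJ: the class name DescriptorDistanceSketch, the method name and the use of int[] for the vectors are assumptions made for this example, and the vectors are shortened to four dimensions for readability.

```java
public class DescriptorDistanceSketch {
    /** Euclidean (L2) distance between two descriptors of equal length. */
    public static double euclidean(int[] a, int[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("descriptors must have the same length");
        }
        long sum = 0;
        for (int i = 0; i < a.length; i++) {
            long d = a[i] - b[i];
            sum += d * d; // accumulate squared per-dimension differences
        }
        return Math.sqrt(sum);
    }

    public static void main(String[] args) {
        // Hypothetical 4-dimensional descriptors; a SIFT descriptor would have 128 values.
        int[] p = {10, 0, 3, 7};
        int[] q = {10, 4, 0, 7};
        System.out.println(euclidean(p, q)); // sqrt(0 + 16 + 9 + 0) = 5.0
    }
}
```

A smaller distance means the two patches look more alike; other measures could be substituted without changing the overall matching idea.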

The challenge in comparing Keypoints is working out which Keypoints from some query image match those from some target. The most basic approach is to take a given Keypoint in the query and find the Keypoint that is closest in the target. A minor improvement on top of this is to disregard those points which match well with many other points in the target. Such points are considered non-descriptive. Matching can be achieved in OpenIMAJ using the BasicMatcher. Next we’ll construct and set up such a matcher:

LocalFeatureMatcher<Keypoint> matcher = new BasicMatcher<Keypoint>(80);

matcher.setModelFeatures(queryKeypoints);

matcher.findMatches(targetKeypoints);
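The nearest-neighbour idea described above can be sketched in plain Java as follows. This is a simplified stand-in and not the actual BasicMatcher implementation: it rejects ambiguous points by comparing the best distance against the second-best one, which is one common way of discarding non-descriptive points. The class name, the int[][] descriptor layout and the ratio parameter are all assumptions for illustration, and the ratio is unrelated to the value 80 passed to the matcher above.

```java
import java.util.ArrayList;
import java.util.List;

public class NearestNeighbourSketch {
    /** Squared Euclidean distance between two descriptors of equal length. */
    static long squaredDistance(int[] a, int[] b) {
        long sum = 0;
        for (int i = 0; i < a.length; i++) {
            long d = a[i] - b[i];
            sum += d * d;
        }
        return sum;
    }

    /**
     * Returns {queryIndex, targetIndex} pairs. A query descriptor is matched only
     * if its nearest target is clearly closer than the runner-up.
     */
    public static List<int[]> match(int[][] query, int[][] target, double ratio) {
        List<int[]> matches = new ArrayList<>();
        for (int q = 0; q < query.length; q++) {
            long best = Long.MAX_VALUE;
            long second = Long.MAX_VALUE;
            int bestIdx = -1;
            for (int t = 0; t < target.length; t++) {
                long d = squaredDistance(query[q], target[t]);
                if (d < best) {
                    second = best;
                    best = d;
                    bestIdx = t;
                } else if (d < second) {
                    second = d;
                }
            }
            // Distances are squared, so the ratio is squared as well.
            if (bestIdx >= 0 && best < ratio * ratio * second) {
                matches.add(new int[]{q, bestIdx});
            }
        }
        return matches;
    }
}
```

With a ratio of 0.8, a query descriptor that is almost equally close to two different target descriptors is dropped, while one with a single clear nearest neighbour is kept.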

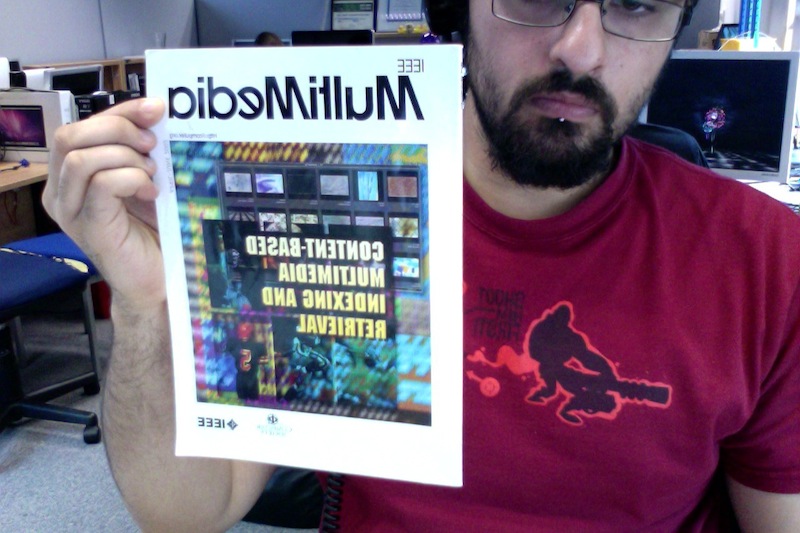

We can now draw the matches between these two images found with this basic matcher using the MatchingUtilities class:

MBFImage basicMatches = MatchingUtilities.drawMatches(query, target, matcher.getMatches(), RGBColour.RED);

DisplayUtilities.display(basicMatches);

As you can see, the basic matcher finds many matches, many of which are clearly incorrect. A more advanced approach is to filter the matches based on a given geometric model. One way of achieving this in OpenIMAJ is to use a ConsistentLocalFeatureMatcher which, given an internal matcher and a model fitter configured to fit a geometric model, finds which matches given by the internal matcher are consistent with respect to the model and are therefore likely to be correct.

To demonstrate this, we’ll use an algorithm called Random Sample Consensus (RANSAC) to fit a geometric model called an Affine transform to the initial set of matches. This is achieved by iteratively selecting a random set of matches, learning a model from this random set and then testing the remaining matches against the learnt model.

Tip: An Affine transform models the transformation between two parallelograms.
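To make the RANSAC loop more concrete, here is a minimal plain-Java sketch of the idea, assuming each match is stored as {x, y, x', y'} and that three matches are sampled per iteration (the minimum needed to determine an affine transform). It is not how OpenIMAJ implements RANSAC; the class name, method names, tolerance and fixed random seed are all hypothetical choices for this example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

public class RansacAffineSketch {
    /** Determinant of a 3x3 matrix. */
    static double det3(double[][] m) {
        return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
             - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
             + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
    }

    /** Solves a*x + b*y + c = v for three points (Cramer's rule); null if they are collinear. */
    static double[] solve(double[][] pts, double[] v) {
        double[][] m = new double[3][];
        for (int i = 0; i < 3; i++) m[i] = new double[]{pts[i][0], pts[i][1], 1};
        double d = det3(m);
        if (Math.abs(d) < 1e-9) return null;
        double[] out = new double[3];
        for (int col = 0; col < 3; col++) {
            double[][] mc = new double[3][];
            for (int i = 0; i < 3; i++) {
                mc[i] = m[i].clone();
                mc[i][col] = v[i];
            }
            out[col] = det3(mc) / d;
        }
        return out;
    }

    /** Fits {a, b, c, d, e, f} with x' = a*x + b*y + c and y' = d*x + e*y + f from three matches. */
    public static double[] fitAffine(double[][] s) {
        double[] r1 = solve(s, new double[]{s[0][2], s[1][2], s[2][2]});
        double[] r2 = solve(s, new double[]{s[0][3], s[1][3], s[2][3]});
        if (r1 == null || r2 == null) return null;
        return new double[]{r1[0], r1[1], r1[2], r2[0], r2[1], r2[2]};
    }

    /** Distance between the transformed source point and the matched target point. */
    static double error(double[] t, double[] p) {
        double px = t[0] * p[0] + t[1] * p[1] + t[2];
        double py = t[3] * p[0] + t[4] * p[1] + t[5];
        return Math.hypot(px - p[2], py - p[3]);
    }

    /** Returns the largest set of matches found to agree with a single affine transform. */
    public static List<double[]> ransac(double[][] matches, double tol, int iterations, long seed) {
        Random rng = new Random(seed);
        List<double[]> best = new ArrayList<>();
        int n = matches.length;
        for (int it = 0; it < iterations; it++) {
            // Select a random set of three distinct matches.
            int i = rng.nextInt(n), j = rng.nextInt(n), k = rng.nextInt(n);
            if (i == j || j == k || i == k) continue;
            // Learn a model from the random set.
            double[] t = fitAffine(new double[][]{matches[i], matches[j], matches[k]});
            if (t == null) continue;
            // Test every match against the learnt model.
            List<double[]> inliers = new ArrayList<>();
            for (double[] p : matches) {
                if (error(t, p) <= tol) inliers.add(p);
            }
            if (inliers.size() > best.size()) best = inliers;
        }
        return best;
    }
}
```

Matches that end up in the returned list are the ones consistent with a single transform; the rest are treated as outliers, which is the filtering effect the consistent matcher relies on.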

We’ll now set up a RANSAC model fitter configured to find Affine Transforms (using the RobustAffineTransformEstimator helper class) and our consistent matcher:

RobustAffineTransformEstimator modelFitter = new RobustAffineTransformEstimator(5.0, 1500, new RANSAC.PercentageInliersStoppingCondition(0.5));

matcher = new ConsistentLocalFeatureMatcher2d<Keypoint>(new FastBasicKeypointMatcher<Keypoint>(8), modelFitter);

matcher.setModelFeatures(queryKeypoints);

matcher.findMatches(targetKeypoints);

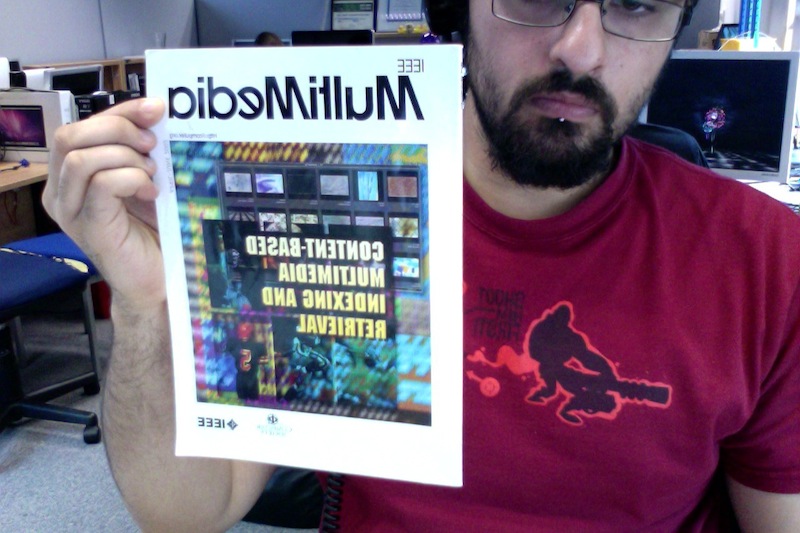

MBFImage consistentMatches = MatchingUtilities.drawMatches(query, target, matcher.getMatches(), RGBColour.RED);

DisplayUtilities.display(consistentMatches);

The AffineTransformModel class models a two-dimensional Affine transform in OpenIMAJ. The RobustAffineTransformEstimator class provides a method getModel() which returns the internal Affine Transform model whose parameters are optimised during the fitting process driven by the ConsistentLocalFeatureMatcher2d.

An interesting byproduct of using the ConsistentLocalFeatureMatcher2d is that the AffineTransformModel returned by getModel() contains the best transform matrix to go from the query to the target. We can take advantage of this by transforming the bounding box of our query with the transform estimated in the AffineTransformModel, which lets us draw a polygon around the estimated location of the query within the target:

target.drawShape(query.getBounds().transform(modelFitter.getModel().getTransform().inverse()), 3, RGBColour.BLUE);

DisplayUtilities.display(target);
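What transforming the bounding box amounts to can be sketched without OpenIMAJ: each corner of the query rectangle is multiplied by a 3x3 affine matrix to give the corners of the polygon in the target. The class name, method name and example matrix below are hypothetical; the sketch simply applies whatever matrix it is given and makes no claim about which direction the estimated transform in the tutorial code maps.

```java
public class BoundsTransformSketch {
    /**
     * Applies a 3x3 affine matrix (last row assumed to be 0, 0, 1) to the four
     * corners of a width x height rectangle whose top-left corner is the origin.
     */
    public static double[][] transformCorners(double[][] m, double width, double height) {
        double[][] corners = {{0, 0}, {width, 0}, {width, height}, {0, height}};
        double[][] out = new double[4][2];
        for (int i = 0; i < corners.length; i++) {
            double x = corners[i][0];
            double y = corners[i][1];
            out[i][0] = m[0][0] * x + m[0][1] * y + m[0][2];
            out[i][1] = m[1][0] * x + m[1][1] * y + m[1][2];
        }
        return out;
    }

    public static void main(String[] args) {
        // Hypothetical transform: scale by 2, then shift by (10, -5).
        double[][] m = {{2, 0, 10}, {0, 2, -5}, {0, 0, 1}};
        for (double[] c : transformCorners(m, 100, 50)) {
            System.out.println(c[0] + ", " + c[1]);
        }
    }
}
```

Joining the four returned points in order gives the outline that is drawn over the target image.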

5.1.1. Exercise 1: Different matchers

Experiment with different matchers; try the BasicTwoWayMatcher for example.

5.1.2. Exercise 2: Different models

Experiment with different models (such as a HomographyModel) in the consistent matcher. The RobustHomographyEstimator helper class can be used to construct an object that fits a HomographyModel. You can also experiment with an alternative robust fitting algorithm to RANSAC called Least Median of Squares (LMedS) through the RobustHomographyEstimator.

Tip: A HomographyModel models a planar Homography between two planes. Planar Homographies are more general than Affine transforms and map quadrilaterals to quadrilaterals.
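The difference from an affine transform can be seen in a small plain-Java sketch: a homography uses the full 3x3 matrix, including its last row, and divides by the resulting third coordinate. The class name and example matrix are hypothetical and the code is independent of the HomographyModel class.

```java
public class HomographySketch {
    /** Applies a 3x3 homography to the point (x, y), including the perspective divide. */
    public static double[] apply(double[][] h, double x, double y) {
        double w = h[2][0] * x + h[2][1] * y + h[2][2];
        double px = (h[0][0] * x + h[0][1] * y + h[0][2]) / w;
        double py = (h[1][0] * x + h[1][1] * y + h[1][2]) / w;
        return new double[]{px, py};
    }

    public static void main(String[] args) {
        // Hypothetical homography with a non-trivial last row.
        double[][] h = {{1, 0, 0}, {0, 1, 0}, {0.5, 0, 1}};
        double[][] rectangle = {{0, 0}, {2, 0}, {2, 1}, {0, 1}};
        for (double[] c : rectangle) {
            double[] p = apply(h, c[0], c[1]);
            System.out.println(p[0] + ", " + p[1]);
        }
    }
}
```

In this example the left edge of the rectangle keeps its length of 1 while the right edge shrinks to 0.5, so the result is a general quadrilateral and not a parallelogram, which an affine transform could not produce.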
